When you generate images with an AI such as Stable Diffusion or DALL-E 3, you tend to run into problems like "vague patterns are output instead of letters" and "even short words are misspelled." A lively discussion is under way on the social news site Hacker News about why image generation AI is so poor at rendering text.

Ask HN: Why can't image generation models spell? | Hacker News

https://news.ycombinator.com/item?id=39727376

Here is an example of creating an image with text using an image generation AI. We gave Image Creator, which is powered by DALL-E 3, the prompt "An image of the exterior of a ramen shop with the name 'Ramen Fantasy' written on it." As a result, the phrase "Ramen Fantasy" was not output. Instead, the misspelled word "RAIMEN" and puzzling kanji-like patterns were generated.

Since Japanese text appears to be converted to English before it is processed, we changed the prompt to "Image of the exterior of a ramen shop with 'Ramen Eater' written on it" to generate an image with English words. In the generated result, "Eater" was rendered as "eater".

The inability of image generation AI to render text correctly seems to trouble users around the world. On the social news site Hacker News, a user posted: "I wanted to create an image that included my son's name, but the name was misspelled in the image, even though it is only 5 letters long. Why does the AI misspell it?" The post received many comments.

Gwern Branwen, who is familiar with artificial intelligence, explained why AI is not good at generating characters, giving reasons such as "many image generation AI models are not able to learn text well" and "they do not take character output into account when encoding prompts."

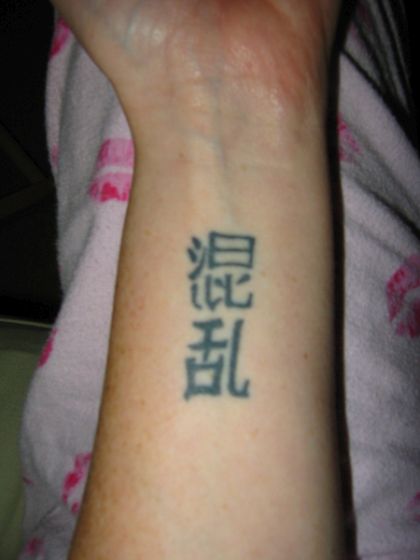
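The point about prompt encoding can be illustrated with a toy sketch. Many text encoders split a prompt into subword tokens rather than individual letters, so the image model may only ever see token IDs and not the spelling behind them. The vocabulary and the `toy_tokenize` function below are hypothetical, made up for illustration, and are not taken from DALL-E 3, Stable Diffusion, or any real tokenizer.

```python
# Toy illustration only: this vocabulary is hypothetical and is not taken
# from DALL-E 3, Stable Diffusion, or any real tokenizer.
TOY_VOCAB = {"ramen": 0, "ra": 1, "men": 2, "eat": 3, "er": 4, "fant": 5, "asy": 6}


def toy_tokenize(word, vocab=TOY_VOCAB):
    """Split a word into the longest matching vocabulary pieces, left to right."""
    word = word.lower()
    ids = []
    pos = 0
    while pos < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), pos, -1):
            piece = word[pos:end]
            if piece in vocab:
                ids.append(vocab[piece])
                pos = end
                break
        else:
            # No piece matched at this position.
            raise ValueError(f"no vocabulary piece matches {word[pos:]!r}")
    return ids


print(toy_tokenize("Ramen"))    # [0]
print(toy_tokenize("Eater"))    # [3, 4]
print(toy_tokenize("Fantasy"))  # [5, 6]
```

In this sketch the five letters of "Ramen" collapse into a single ID, and nothing in that ID records which letters make up the word. A model trained only on such IDs would have to learn the spelling indirectly from images, which is one plausible reading of the explanation above.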

In addition, Barking Cat argued that "the training data for image generation AI does not include enough textual information," and offered an analogy: "If an English-speaking artist who does not know Japanese at all creates a tattoo that includes kanji, they may not know what kanji look like or how to write them, so they may end up drawing a strange tattoo."

Developers of image generation AI models are also aware of the problem of not being able to render text well, and research and development to improve accuracy is progressing. For example, "Stable Diffusion 3," announced in February 2024, is promoted for its ability to output text accurately.

High-quality image generation AI "Stable Diffusion 3" announced, achieving with high precision the "rendering of specified text" and "rendering of multiple subjects" that image generation AI has been weak at – GIGAZINE
