Want to edit AI-generated images yourself in exactly the right places within seconds, for example to make a cat blink? DragGAN makes that possible.

Imagine you are using AI to create an image for your next ad and you are not entirely happy with the product placement, the perspective or the facial expression of the person in the image. Now imagine that you can simply select the relevant areas and drag them until the image matches what you had in mind. This is exactly what a new method called DragGAN offers. It is still a research project, developed by the Max Planck Institute for Informatics, the Saarbrücken Research Center for Visual Computing, Interaction and Artificial Intelligence, MIT, the University of Pennsylvania and Google AR/VR. Integrated into an image editing product, however, DragGAN could compete with established services such as Photoshop, Canva and the like. The method is effective and suitable for various application scenarios.

What is DragGAN and what is behind this method?

In DragGAN, GAN stands for generative adversarial network, a machine learning framework in which two neural networks are trained against each other. This type of framework is often used for generative AI. The team around Xingang Pan, Ayush Tewari, Thomas Leimkühler, Lingjie Liu, Abhimitra Meka and Christian Theobalt also relied on a GAN to develop the DragGAN method. With it, computer-generated images can be modified by drag and drop: users mark points and then move them as they like. Directions, sizes, poses, perspectives and the like can all be adjusted easily; you can even make a cat wink. The method is still at the research stage, but the paper states specifically:

In this work, we study a powerful yet much less explored way of controlling GANs, that is, to “drag” any points of the image to precisely reach target points in a user-interactive manner, as shown in Fig. 1. To achieve this, we propose DragGAN, which consists of two main components: 1) a feature-based motion supervision that drives the handle point to move towards the target position, and 2) a new point tracking approach that leverages the discriminative generator features to keep localizing the position of the handle points. Through DragGAN, anyone can deform an image with precise control over where pixels go, thus manipulating the pose, shape, expression, and layout of diverse categories such as animals, cars, humans, landscapes, etc.
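The point tracking idea from the quoted passage can be illustrated with a small sketch. It is not the authors' implementation. It assumes that the generator's feature map is already available as a NumPy array of shape (height, width, channels) and that the handle point is re-located by searching a small window for the feature vector most similar to the one originally found at the handle point. The function name `track_handle_point`, the `radius` parameter and the use of the L1 distance are illustrative assumptions.

```python
import numpy as np

def track_handle_point(features, handle_feature, last_pos, radius=3):
    """Return the (row, col) inside a square window around last_pos whose
    feature vector has the smallest L1 distance to handle_feature."""
    height, width, _ = features.shape
    row, col = last_pos
    # Clip the search window to the bounds of the feature map.
    r0, r1 = max(row - radius, 0), min(row + radius + 1, height)
    c0, c1 = max(col - radius, 0), min(col + radius + 1, width)
    patch = features[r0:r1, c0:c1]                      # shape (h, w, C)
    dist = np.abs(patch - handle_feature).sum(axis=-1)  # shape (h, w)
    dr, dc = np.unravel_index(np.argmin(dist), dist.shape)
    return int(r0 + dr), int(c0 + dc)

# Toy data (hypothetical): random features stand in for generator features.
rng = np.random.default_rng(0)
feats = rng.normal(size=(16, 16, 8))
handle_feature = feats[5, 6].copy()   # feature at the original handle point

# Simulate an edit step that moves the content one pixel down and right.
shifted = np.roll(feats, shift=(1, 1), axis=(0, 1))
new_pos = track_handle_point(shifted, handle_feature, (5, 6), radius=3)
print(new_pos)
```

In an interactive editor, a step like this would run after every small update of the image so that the handle point keeps following the same part of the object while it is dragged towards the target.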

AI expert Jens Polomsky also reports on the method on LinkedIn and points out its potential for digital work such as creating and editing social media posts or processing your own assets end to end for various purposes, whether at an agency, in a media house or in an e-commerce store.

You can select a few points on the image and indicate where you want them to move, and the image changes so that the points end up at the desired location. This would be a very easy way to manipulate images without much technical or software knowledge.

You can find more details about the method in the paper “Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold”.

A new era of image processing has dawned

Thanks to the latest advances in artificial intelligence, and generative AI in particular, social media managers, ad creatives and visual designers for online shops, for example, have more and more options for creating meaningful, playful and tailored visuals. Canva, for example, uses AI in the demo version of its Magic Edit feature, which lets users insert elements into an image from a text description in a matter of seconds.

Photo editing has never been so fun and creative. Let your imagination run wild.

How will you use this feature? pic.twitter.com/OZoGRItrpo

– Canva (@canva) April 27, 2023

With the help of image descriptions, users can also have their own visuals created with Bing Image Creator.

Introducing image creation in the new Bing and Edge. You can now use Bing Chat to create images with just your words. https://t.co/mCSrEADARl pic.twitter.com/SF1jmkF1aZ

– Yusuf Mehdi (@yusuf_i_mehdi) March 21, 2023

This also works with tools such as Midjourney or Adobe’s Firefly. Using these, AI educator Chris Kashtanova from Columbia University has even produced an AI-generated comic.

I started working on a new comic book with the help of AI and recorded a tutorial for you on how to make such a comic book in Midjourney. I created a previous tutorial for Adobe Firefly and some of you asked for Midjourney. Will post in a few hours.

I called this “Different… pic.twitter.com/ovYRYFeDH2

– Chris Kashtanova (@icreatelife) May 17, 2023

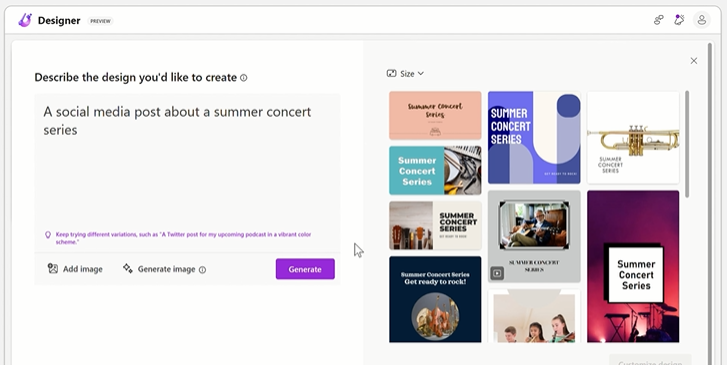

Design ideas can now also be implemented creatively with Microsoft Designer, an AI-based tool that allows visuals to be created at lightning speed.

AI image editing and creation also makes ad creation easier

Big tech companies are currently busy developing tools and templates that make creating and editing visuals as easy as possible. This also has a positive effect on the efficiency of creative production in advertising. Meta, for example, already provides an AI Sandbox for AI-powered ads. The group is also using a new AI model: the Segment Anything Model, or SAM for short. It can identify objects in photos and videos, even if it has never encountered those objects before. Users can either click on items in the image or search for items by entering text. For example, if the word “penguin” is entered, all penguins that can be found in the picture are marked.

In addition to Meta, Google, Amazon and others are making it easier for advertisers and retailers to create images with AI tools. This will fundamentally change creative production.

What tools and methods do you use for your purposes when creating visuals? Let us know in the comments.

Advertising at the next level:

Google, Meta, and Amazon usher in the era of AI-generated advertising
