Running the image generation AI Stable Diffusion requires a GPU with sufficient performance and VRAM, so you need a high-spec PC or workstation, or access to a GPU server that lends out computing resources. Machine Learning Compilation (MLC), which offers machine learning courses for engineers, has released Web Stable Diffusion, which runs Stable Diffusion in the browser without any server support.

WebSD | Home

https://mlc.ai/web-stable-diffusion/

A beta version of Web Stable Diffusion has been released, but at the time of writing it has only been confirmed to work on Macs with an M1 or M2 chip. This time, I ran the demo on an M1 iMac (8-core CPU, 8-core GPU, 256GB storage, 16GB RAM model).

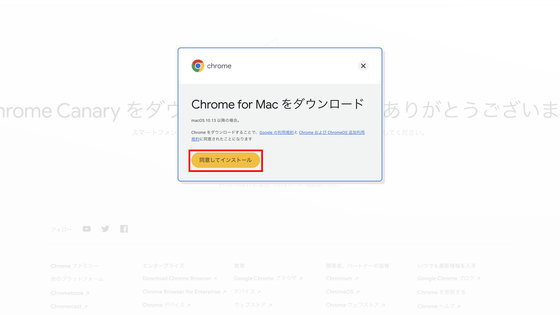

At the time of writing, the Web Stable Diffusion beta requires Chrome Canary, a pre-release version of Google Chrome. First, go to Chrome Canary's distribution page and click "Download Chrome Canary."

Click "Agree and Install" to download the installer in DMG format. The installer file is 218.6 MB.

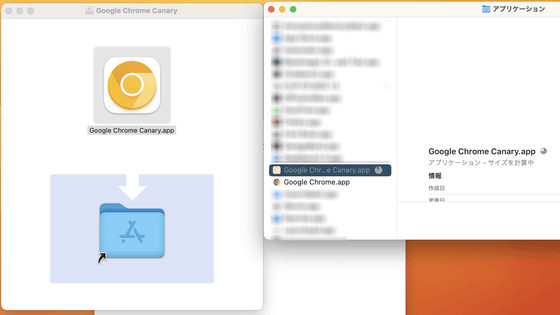

Run the installer and install Chrome Canary.

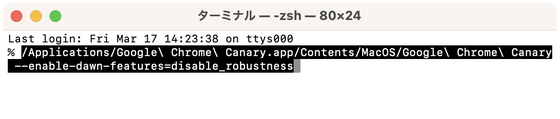

Then, in Terminal, launch Chrome Canary with the following command: `/Applications/Google\ Chrome\ Canary.app/Contents/MacOS/Google\ Chrome\ Canary --enable-dawn-features=disable_robustness`
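Web Stable Diffusion runs the model on the GPU through WebGPU (as described in the project's GitHub repository), and the `--enable-dawn-features` flag configures Dawn, Chrome's WebGPU implementation. The following is a minimal sketch, assuming a standard WebGPU-capable browser, for checking whether a page can actually reach the GPU. The helper name `checkWebGPU` is hypothetical and not part of WebSD; only the standard `navigator.gpu` and `requestAdapter()` API is used.

```typescript
// Hypothetical helper (not part of WebSD): reports whether WebGPU is usable.
async function checkWebGPU(): Promise<string> {
  // navigator.gpu exists only in WebGPU-enabled browsers; the optional
  // chaining also guards environments where navigator itself is missing.
  const gpu = (globalThis as any).navigator?.gpu;
  if (!gpu) {
    return "WebGPU is not available in this browser";
  }
  // requestAdapter() resolves to null when no suitable GPU is found.
  const adapter = await gpu.requestAdapter();
  if (!adapter) {
    return "WebGPU is exposed, but no GPU adapter was found";
  }
  // Model weights need large GPU buffers, so report the adapter's limit.
  const maxBuffer: number = adapter.limits.maxBufferSize;
  return `WebGPU adapter found (maxBufferSize: ${maxBuffer} bytes)`;
}
```

Compiled to JavaScript and run on a page opened in the launched Chrome Canary, `checkWebGPU().then(console.log)` prints which of the three cases applies.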

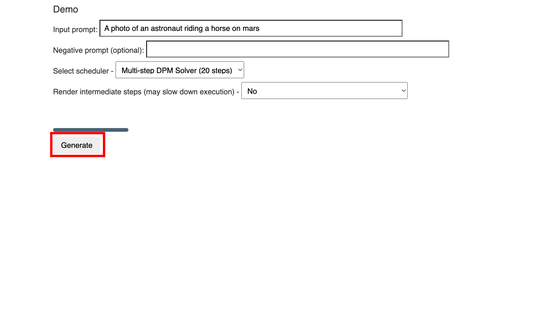

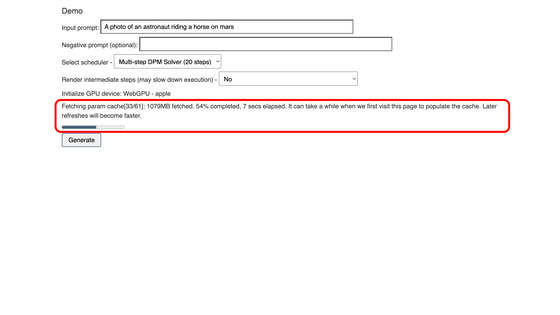

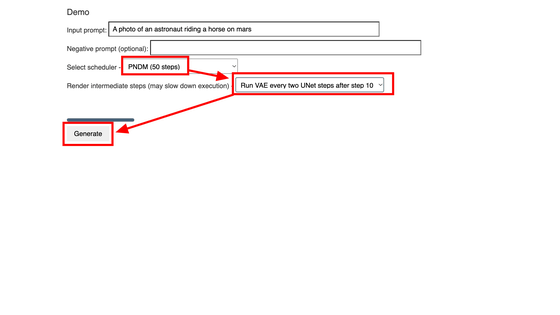

The Web Stable Diffusion demo is in the "Demo" section at the bottom of the page. Enter a prompt in "Input Prompt" and, optionally, a negative prompt in "Negative Prompt (Optional)". This time, I clicked "Generate" with the default prompt, "Image of an astronaut riding a horse on Mars."
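The negative prompt plays the role of the "unconditional" input in classifier-free guidance. In the standard formulation used by Stable Diffusion pipelines such as Hugging Face diffusers (whether WebSD implements it identically is an assumption), each denoising step combines two noise predictions from the U-Net:

$$
\hat{\epsilon} = \epsilon_\theta(x_t, t, c_{\text{neg}}) + s\,\bigl(\epsilon_\theta(x_t, t, c_{\text{pos}}) - \epsilon_\theta(x_t, t, c_{\text{neg}})\bigr)
$$

Here $x_t$ is the noisy latent at step $t$, $c_{\text{pos}}$ and $c_{\text{neg}}$ are the text embeddings of the prompt and the negative prompt (an empty prompt when the field is left blank), and $s$ is the guidance scale (diffusers defaults to 7.5). With $s > 1$, each step is pushed toward the prompt and away from the negative prompt.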

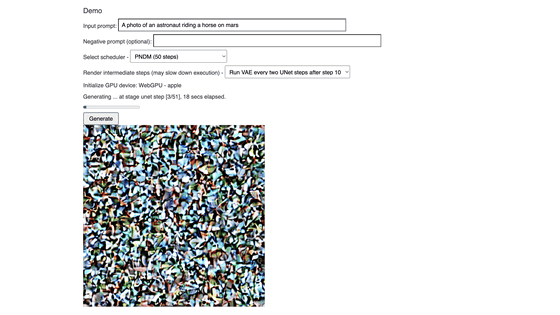

Image generation started.

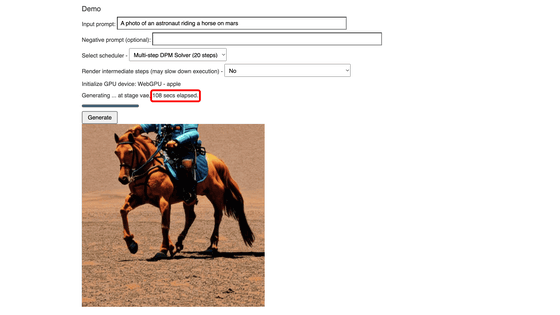

In just under two minutes, the following image was generated.

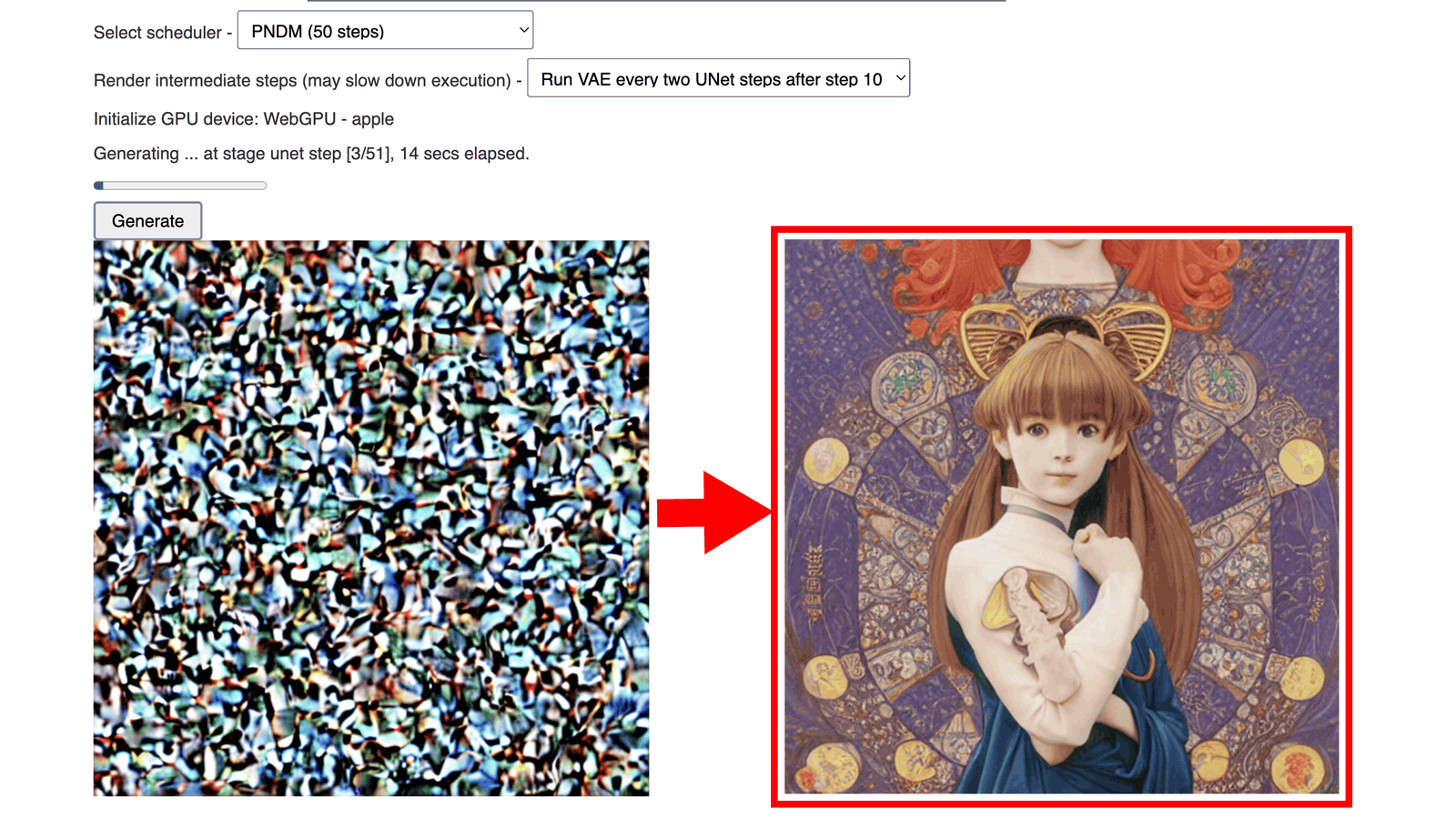

Next, I changed the number of scheduler steps with "Select scheduler" and turned on intermediate rendering with "Intermediate rendering steps." The scheduler can be set to either 20 or 50 steps. With 50 steps the denoising is carried out more finely, which can improve image quality, but generation takes longer. Intermediate rendering lets you watch the image emerge from the noise during generation, but it also adds to the generation time.
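Why both settings cost time can be seen in a generic diffusion sampling loop. The sketch below is a hypothetical TypeScript outline, not WebSD's actual code: each scheduler step runs one U-Net evaluation, and each intermediate render adds an extra decode of the latent into a viewable image (in Stable Diffusion, the VAE decoder's job). The names `runDenoisingLoop`, `denoiseStep`, and `decodeToImage`, and the "render every N steps" behavior of `renderEvery`, are assumptions for illustration.

```typescript
// Hypothetical sketch of a diffusion sampling loop (not WebSD's code).
type Latent = Float32Array;

interface LoopStats {
  unetCalls: number;  // one U-Net evaluation per scheduler step
  vaeDecodes: number; // latent-to-image decodes (intermediate + final)
}

function runDenoisingLoop(
  numSteps: number,   // e.g. 20 or 50 scheduler steps
  renderEvery: number, // assumed: render every N steps; 0 = off
  denoiseStep: (latent: Latent, step: number) => Latent,
  decodeToImage: (latent: Latent) => void,
  initial: Latent,
): LoopStats {
  let latent = initial;
  const stats: LoopStats = { unetCalls: 0, vaeDecodes: 0 };
  for (let step = 0; step < numSteps; step++) {
    // Remove a bit of noise; this is the expensive U-Net call.
    latent = denoiseStep(latent, step);
    stats.unetCalls++;
    const isLast = step === numSteps - 1;
    // Optional intermediate preview: an extra decode on top of the U-Net work.
    if (renderEvery > 0 && (step + 1) % renderEvery === 0 && !isLast) {
      decodeToImage(latent);
      stats.vaeDecodes++;
    }
  }
  // The final image is always decoded once.
  decodeToImage(latent);
  stats.vaeDecodes++;
  return stats;
}
```

For example, `runDenoisingLoop(50, 10, (l) => l, () => {}, new Float32Array(4))` returns `{ unetCalls: 50, vaeDecodes: 5 }` (four previews plus the final image), while 20 steps with rendering off gives `{ unetCalls: 20, vaeDecodes: 1 }`.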

Once generation began and the process reached the U-Net stage, a noisy image appeared.

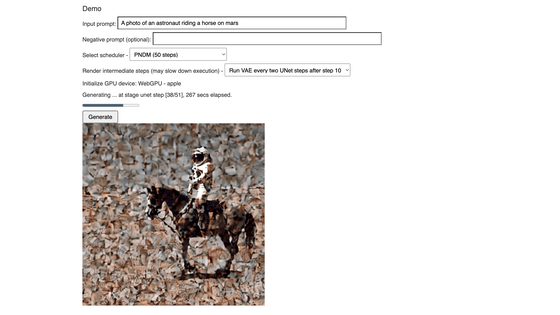

Little by little, the image is created from the noise.

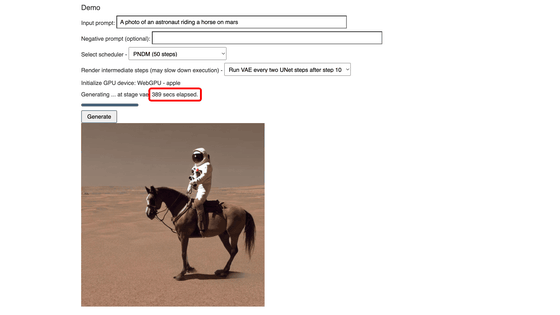

The final image was then generated. The display read "389 seconds elapsed," meaning it took about 6 and a half minutes to create one image.

Web Stable Diffusion code is posted on GitHub.

GitHub – mlc-ai/web-stable-diffusion: Bringing stable diffusion models to web browsers. Everything works inside the browser without server support.

https://github.com/mlc-ai/web-stable-diffusion
